DroidSonic

Audio Framework for Android Automotive OS

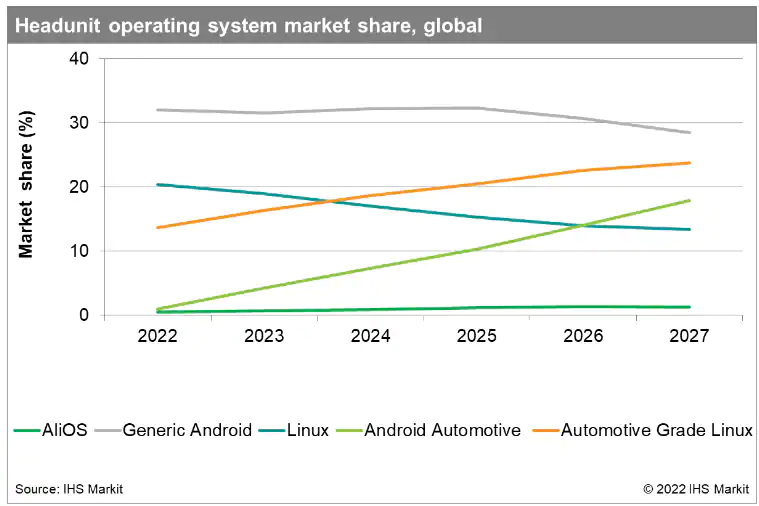

Android Automotive is gaining more and more traction as an operating system for automotive infotainment head units, and is set to replace both standard Android and custom Linux-based devices in the near to mid future.

The well-defined and market-proven framework for apps (e.g. for charging electric cars or booking a spot in a parking lot), along with a powerful set of standard applications (such as Google Maps) and services, drives this success. It enables automotive OEMs to focus on the user interface and on additional services specific to the infrastructure of their vehicles.

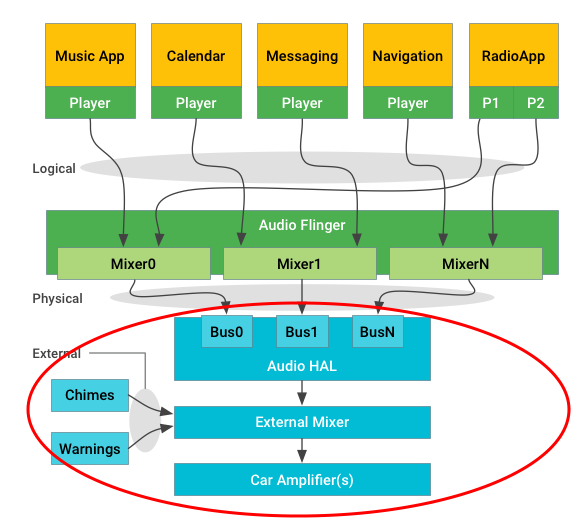

One critical component is the audio system of the car, and Google does not deliver any automotive-specific audio processing solutions here. Instead, Android Automotive OS merely defines simple audio interfaces for audio input and output to/from the Android framework.

The so-called external mixer is more than a mixer: it contains the audio processing for the entire vehicle, including multiple audio zones. This ranges from user EQ, speaker EQ, chime, and tone generation to advanced processing such as active noise cancellation (ANC/RNC), microphone beamforming, or engine sound synthesis.

Constraints for Audio on Android Automotive OS

Two vital limitations are difficult to solve within the Android software stack:

a) high latencies

b) safety compliance

Low latency is critical especially for voice processing and ANC/RNC, which must meet system-intrinsic real-time requirements, for example detecting transients in the recorded microphone signal and generating anti-noise in near real time. Such low-latency processing requires audio processing block lengths of 8 samples at 48 kHz or even less. A full-blown OS is not well suited to such tasks, as many context switches are required before the relevant software module is actually executed. In addition, commonly used Cortex-A processors have long execution pipelines, which leads to pipeline stalls and performance loss.
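To put the block length into perspective: a block of N samples at sample rate fs covers N / fs seconds, and all processing for that block has to finish within this time. The following is a minimal sketch of that arithmetic; the function name is illustrative and not part of DroidSonic.

```cpp
#include <cassert>
#include <cstdint>

// Illustrative helper (not a DroidSonic API): time span covered by one
// audio block, in microseconds. This is also the processing deadline
// for that block.
inline double blockDurationUs(std::uint32_t blockSize, std::uint32_t sampleRateHz) {
    assert(sampleRateHz > 0);
    return 1.0e6 * blockSize / sampleRateHz;
}
```

For 8 samples at 48 kHz this gives roughly 166.7 µs per block, compared with about 5.33 ms for a block of 256 samples.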

Additionally, chimes and warning sounds (e.g. to prompt the driver to regain control of the steering wheel) are safety-relevant. This requires an analysis and assessment of the entire software chain, from audio/DMA interrupt handling to the execution of the underlying signal generator algorithms, to ensure safety compliance.

This can be solved either by running the audio framework in a separate virtual machine under a hypervisor or by running it on dedicated DSP cores, which most modern systems-on-chip (SoCs) suited for car infotainment applications provide for audio processing under a real-time operating system.

DroidSonic – purpose and context

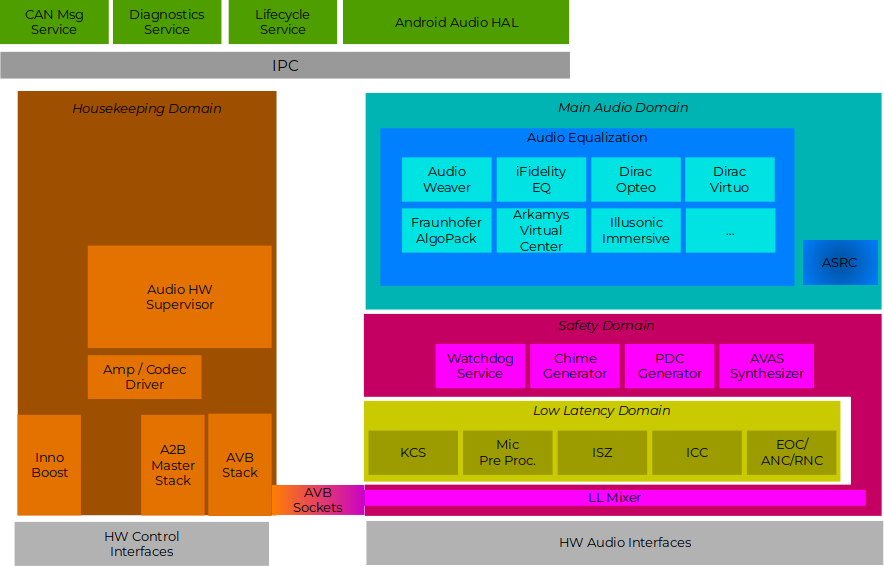

The DroidSonic audio framework needs to bring several processing domains together, each of which fulfills specific purposes:

a) Safety domain

All safety-relevant signals are generated and mixed into the output stream within this domain. This code must be safety-certified according to the required ASIL level, which is often a problem for IP-protected third-party algorithms. The safety domain often runs on a completely separate CPU.

b) Low-latency domain

This domain contains all algorithms that operate on a small audio block size to fulfill low-latency requirements, such as ANC/RNC/EOC or microphone pre-processing for telephony or voice recognition.

The low-latency domain feeds audio into the safety domain, so the safety domain is also required to work with small block sizes.

c) Main processing domain

Generally speaking, all algorithms that neither have particular safety relevance nor require low latency should be processed in this domain. As mentioned above, modern CPUs and DSPs have long pipelines and dedicated loop processing instructions that reduce CPU cycles significantly on large audio block sizes of 256 samples/block or more. Particularly CPU-intensive algorithms, such as FIR filter banks or FFTs, should therefore be run in this main processing domain.

For maximum efficiency, code generated via external frameworks for which safety compliance cannot be guaranteed, such as AudioWeaver or SigmaStudio, should be run in this domain.

This means DroidSonic does not compete with AudioWeaver or SigmaStudio – it embeds them into a wider context, also with regard to different asynchronous clock domains.

Why DroidSonic?

DroidSonic enables audio processing for Android Automotive OS. It brings all standard algorithms for car-specific audio equalization to the table, as well as everything needed to integrate third-party algorithms and connect them with one another to create a full-featured audio solution, regardless of whether algorithms work on different block sizes or whether sources and sinks reside in different clock domains.
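Connecting algorithms that work on different block sizes requires some form of block adaptation between them. As a minimal sketch, assuming the large block size is a whole multiple of the small one, the class below collects small blocks until one large block is complete. `BlockAccumulator` and its methods are hypothetical names chosen for illustration, not the DroidSonic API.

```cpp
#include <array>
#include <cassert>
#include <cstddef>

// Hypothetical adapter (illustration only): collects small blocks, e.g.
// from a low-latency stage, until one large block for a stage working on
// bigger blocks is complete.
template <std::size_t SmallN, std::size_t LargeN>
class BlockAccumulator {
    static_assert(LargeN % SmallN == 0,
                  "large block must be a multiple of the small block");

public:
    // Appends one small block; returns true once the large block is full.
    bool push(const std::array<float, SmallN>& in) {
        assert(fill_ < LargeN);  // caller must take() a full block first
        for (std::size_t i = 0; i < SmallN; ++i) {
            buffer_[fill_ + i] = in[i];
        }
        fill_ += SmallN;
        return fill_ == LargeN;
    }

    // Returns the completed large block and restarts accumulation.
    const std::array<float, LargeN>& take() {
        assert(fill_ == LargeN);
        fill_ = 0;
        return buffer_;
    }

private:
    std::array<float, LargeN> buffer_{};
    std::size_t fill_ = 0;
};
```

With `SmallN = 8` and `LargeN = 256`, `push` reports a complete block on every 32nd call. The added buffering is one reason why latency-critical paths are kept out of the large-block domain.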

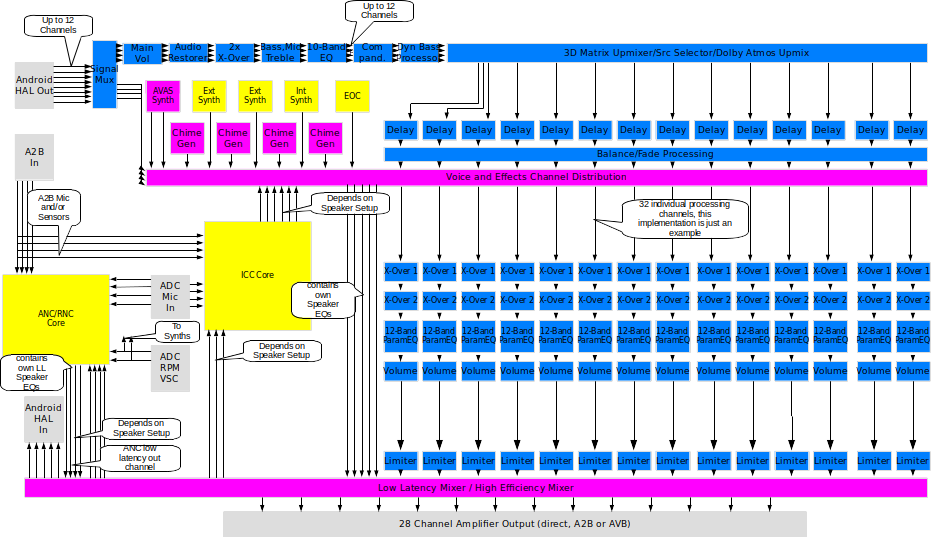

The figure illustrates an example implementation, color-coded by audio domain:

- Yellow: Low-latency domain

- Magenta: Safety domain

- Blue: Main processing domain

- Gray: Audio I/O (each may reside in different clock domains)

Safety and Efficiency

The DroidSonic source code can additionally be certified to fulfill safety requirements.

DroidSonic is written in embedded C++, using core-specific assembly for all CPU-intensive algorithms such as IIR and FIR filters. An encapsulation scheme guarantees minimal re-certification effort when porting DroidSonic to a new CPU/DSP architecture.

DroidSonic’s algorithms and buffers are optimized at compile and link time, so routines can be tailored to the configured block sizes in each of the three audio domains (e.g. to avoid unnecessary loop unrolling or intermediate buffer copying).
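One common way to fix block sizes at compile time in C++ is to make the block size a template parameter. The sketch below illustrates that general technique; it is not taken from the DroidSonic sources. The constants, the class name, and the simple one-pole smoother (used here as a stand-in for a real IIR filter) are assumptions for illustration.

```cpp
#include <array>
#include <cassert>
#include <cstddef>

// Hypothetical per-domain block sizes, fixed at compile time.
constexpr std::size_t kLowLatencyBlock = 8;
constexpr std::size_t kMainBlock = 256;

// One-pole smoother y[n] = a * x[n] + (1 - a) * y[n-1], processed in place.
// The block size is a template parameter, so the loop trip count is a
// compile-time constant and buffers need no runtime size field.
template <std::size_t BlockSize>
class OnePole {
public:
    explicit OnePole(float a) : a_(a) { assert(a > 0.0f && a <= 1.0f); }

    void process(std::array<float, BlockSize>& block) {
        for (std::size_t i = 0; i < BlockSize; ++i) {
            state_ = a_ * block[i] + (1.0f - a_) * state_;
            block[i] = state_;
        }
    }

private:
    float a_;
    float state_ = 0.0f;
};

// Each domain gets its own instantiation, compiled for its block size.
using LowLatencyFilter = OnePole<kLowLatencyBlock>;
using MainDomainFilter = OnePole<kMainBlock>;
```

Because each instantiation is compiled separately, the compiler can make unrolling decisions per block size instead of emitting one generic routine for all domains.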

Inter-processor communication

The handover of audio data between the Android framework and the audio framework is also SoC- or system-specific. If the DSP is integrated into the SoC, the IPC mechanisms are based on shared memory and an interrupt mechanism, which are encapsulated in a vendor-specific API for the exchange of command and control data.
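Shared-memory handover between two cores is often organized as a single-producer/single-consumer ring of audio blocks. The sketch below shows that general pattern only. It uses `std::atomic` within one process as a stand-in, and all names are hypothetical. On a real SoC, the memory placement, cache maintenance, and interrupt signaling are handled by the vendor-specific API mentioned above.

```cpp
#include <array>
#include <atomic>
#include <cassert>
#include <cstddef>

// Hypothetical single-producer/single-consumer ring of audio blocks.
// Exactly one side may call write() and exactly one side may call read().
template <std::size_t BlockSize, std::size_t Slots>
class BlockRing {
    static_assert(Slots >= 2, "one slot always stays empty to detect 'full'");

public:
    using Block = std::array<float, BlockSize>;

    // Producer side: returns false if the ring is full (overrun).
    bool write(const Block& in) {
        const std::size_t w = write_.load(std::memory_order_relaxed);
        const std::size_t next = (w + 1) % Slots;
        if (next == read_.load(std::memory_order_acquire)) {
            return false;
        }
        slots_[w] = in;
        write_.store(next, std::memory_order_release);  // publish the block
        return true;
    }

    // Consumer side: returns false if the ring is empty (underrun).
    bool read(Block& out) {
        const std::size_t r = read_.load(std::memory_order_relaxed);
        if (r == write_.load(std::memory_order_acquire)) {
            return false;
        }
        out = slots_[r];
        read_.store((r + 1) % Slots, std::memory_order_release);  // free the slot
        return true;
    }

private:
    std::array<Block, Slots> slots_{};
    std::atomic<std::size_t> write_{0};
    std::atomic<std::size_t> read_{0};
};
```

In such a scheme the interrupt typically only signals that a new block is available, while the audio data itself stays in the shared buffer.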

For external DSPs located in the head unit, audio is exchanged using hardware audio interfaces (e.g. SPORT, (e)SAI, McASP, …). Command and control is realized via additional interfaces, such as UART, SPI, or I2C. For DSPs located in remote amplifier ECUs, those interfaces can be extended by A2B. For OABR-connected amplifiers, audio is exchanged using IEEE 1722, with command and control implemented via a TCP network socket or RPC (e.g. SOME/IP).

For audio data, DroidSonic provides an abstract push/pull mechanism which can easily be interfaced with existing peripheral drivers or even higher-layer subsystems such as ALSA. Control data is encapsulated and serialized into Google Protocol Buffers messages and can easily be ported to various interfaces. A SOME/IP implementation is additionally available.
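An abstract push/pull mechanism of this kind could look roughly like the sketch below. The interface and class names are hypothetical and do not reflect the actual DroidSonic API. The `std::vector`-based FIFO only keeps the example short and is not suitable for real-time code.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical interfaces (illustration only, not the DroidSonic API).
class AudioSink {
public:
    virtual ~AudioSink() = default;
    // Push model: a capture driver hands over samples when they arrive.
    virtual void push(const float* samples, std::size_t count) = 0;
};

class AudioSource {
public:
    virtual ~AudioSource() = default;
    // Pull model: a playback driver requests samples when it needs them.
    // Returns the number of real samples delivered.
    virtual std::size_t pull(float* samples, std::size_t count) = 0;
};

// Simple FIFO that connects a pushing producer with a pulling consumer.
class Fifo : public AudioSink, public AudioSource {
public:
    void push(const float* samples, std::size_t count) override {
        assert(samples != nullptr || count == 0);
        data_.insert(data_.end(), samples, samples + count);
    }

    std::size_t pull(float* samples, std::size_t count) override {
        const std::size_t n = count < data_.size() ? count : data_.size();
        for (std::size_t i = 0; i < n; ++i) {
            samples[i] = data_[i];
        }
        data_.erase(data_.begin(), data_.begin() + static_cast<std::ptrdiff_t>(n));
        for (std::size_t i = n; i < count; ++i) {
            samples[i] = 0.0f;  // underrun: pad with silence
        }
        return n;
    }

private:
    std::vector<float> data_;  // dynamic allocation, for illustration only
};
```

A peripheral driver or an ALSA callback would then only need to call `push` or `pull`, without knowing what sits on the other side of the interface.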

More information

For more information, a list of integrated algorithms, performance figures, or licensing terms, please contact us.